Get started

Basic setup example

HTML

The HTML setup is pretty easy. Just create a div that will hold your canvas and a div that will hold your images.

CSS

The CSS is also very easy. Make sure the div that wraps the canvas fits the document, and give your plane div element any size you want.

Javascript

There's a bit more work in the JavaScript part: we need to instantiate our WebGL context, create a plane with basic uniform parameters, and use it.
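As a rough sketch of that JavaScript, assuming the curtains.js v8 API (`Curtains`, `Plane`, `Plane.onRender()`), a canvas wrapper with the id `canvas`, a plane element with the class `plane`, and shader script tags with the hypothetical ids `plane-vs` and `plane-fs`. The two classes are passed in as arguments rather than imported, only so the function does not depend on a particular bundler setup.

```javascript
// Minimal sketch, assuming the curtains.js v8 API.
// `Curtains` and `Plane` are injected so the function has no import dependency.
function setupBasicScene({ Curtains, Plane }, doc) {
  // create the WebGL context inside <div id="canvas"></div> (assumed id)
  const curtains = new Curtains({ container: "canvas" });

  // the element holding our images (assumed class name)
  const planeElement = doc.getElementsByClassName("plane")[0];

  const params = {
    vertexShaderID: "plane-vs", // hypothetical id of the vertex shader <script> tag
    fragmentShaderID: "plane-fs", // hypothetical id of the fragment shader <script> tag
    uniforms: {
      time: {
        name: "uTime", // name of the uniform inside the shaders
        type: "1f", // a single float
        value: 0,
      },
    },
  };

  const plane = new Plane(curtains, planeElement, params);

  // increase the time uniform at each draw call to animate the shaders
  plane.onRender(() => {
    plane.uniforms.time.value++;
  });

  return { curtains, plane };
}
```

In a bundled project this could be called as `setupBasicScene({ Curtains, Plane }, document)` after `import { Curtains, Plane } from "curtainsjs";`.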

Shaders

Here are some basic vertex and fragment shaders. Just put them inside your body tag, right before you include the library.
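If you would rather keep the shaders in JavaScript, curtains.js planes also accept the sources as strings through the `vertexShader` and `fragmentShader` parameters (instead of `vertexShaderID` / `fragmentShaderID`). Below is a minimal pair as a sketch: it assumes the default uniform names of the first texture (`uSampler0` and `uTextureMatrix0`) and a `uTime` float uniform; the wave constants are arbitrary.

```javascript
// Minimal plane shaders as strings (a sketch, not the library's own example).
const planeVertexShader = `
  precision mediump float;

  // built-in plane attributes
  attribute vec3 aVertexPosition;
  attribute vec2 aTextureCoord;

  // built-in model view and projection matrices
  uniform mat4 uMVMatrix;
  uniform mat4 uPMatrix;

  // texture matrix of the first texture (default name)
  uniform mat4 uTextureMatrix0;

  varying vec3 vVertexPosition;
  varying vec2 vTextureCoord;

  void main() {
    gl_Position = uPMatrix * uMVMatrix * vec4(aVertexPosition, 1.0);

    // pass the varyings to the fragment shader
    vTextureCoord = (uTextureMatrix0 * vec4(aTextureCoord, 0.0, 1.0)).xy;
    vVertexPosition = aVertexPosition;
  }
`;

const planeFragmentShader = `
  precision mediump float;

  varying vec3 vVertexPosition;
  varying vec2 vTextureCoord;

  uniform float uTime;
  uniform sampler2D uSampler0;

  void main() {
    vec2 textureCoord = vTextureCoord;

    // small horizontal wave driven by the time uniform (arbitrary constants)
    textureCoord.x += sin(textureCoord.y * 10.0 + uTime * 0.03) * 0.01;

    gl_FragColor = texture2D(uSampler0, textureCoord);
  }
`;
```

These strings would then go into the plane parameters as `vertexShader: planeVertexShader` and `fragmentShader: planeFragmentShader`.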

Et voilà!

About the plane's attributes: aVertexPosition and aTextureCoord

In our basic example above, we passed the plane's two attributes, aVertexPosition and aTextureCoord, to our fragment shader as the vVertexPosition and vTextureCoord varyings, even though we did not actually use vVertexPosition in the end. But what are these attributes and what do they mean?

aVertexPosition: the plane vertices coordinates

These are the plane's original vertex positions. Multiplying them by the projection and model view matrices in our vertex shader correctly positions our plane in world space:

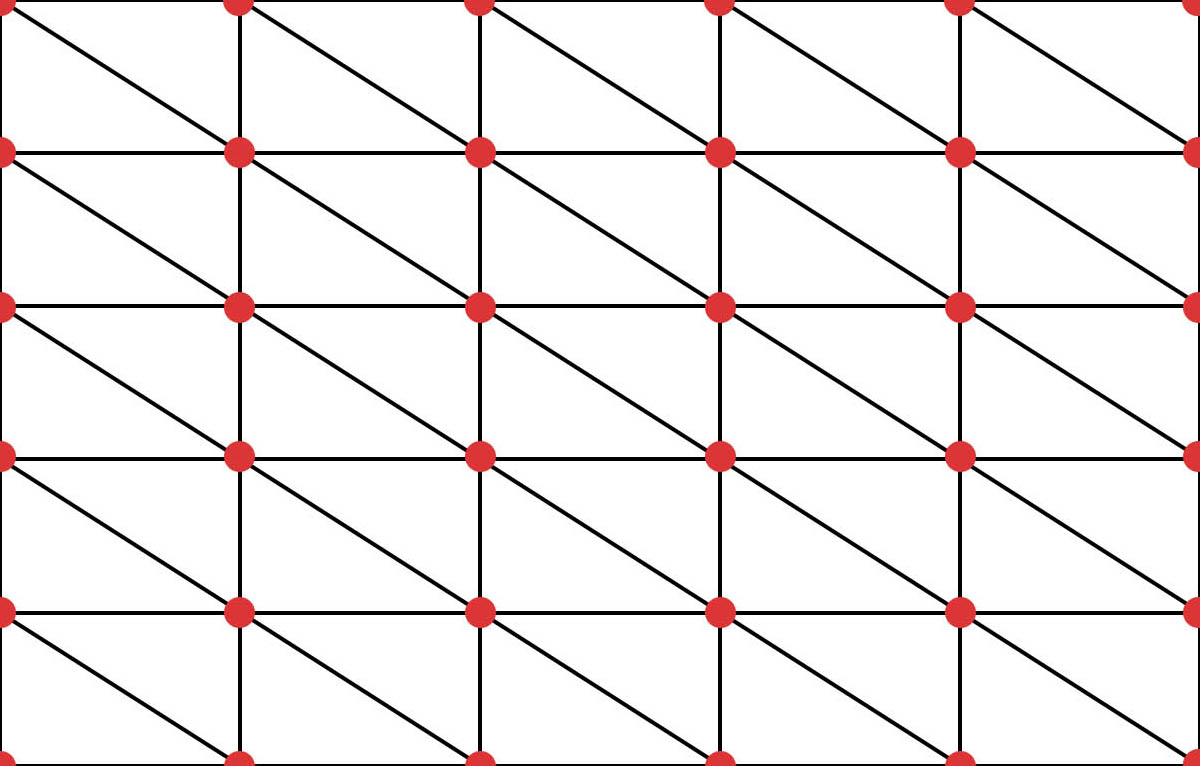

The plane's vertex positions range from -1 to 1 on both the X and Y axes (the Z value is 0 by default), [-1, -1] being the bottom-left corner and [1, 1] the top-right corner, whatever the plane's size, position and scale.

Here is an example where we animate the Z position of the plane's vertices with a sine function of their X coordinate and a time uniform:
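The displacement itself boils down to one line of the vertex shader. The sketch below mirrors it on the CPU in JavaScript so the values are easy to inspect; the frequency (0.0375) and amplitude (0.15) are arbitrary assumptions. Keep in mind that with the default single segment the plane only has four vertices, and the vertices at x = -1 and x = 1 get the same Z value, so you would need to raise the plane's widthSegments parameter to see an actual wave.

```javascript
// CPU-side mirror of this vertex shader line (constants are assumptions):
//   vertexPosition.z = sin(vertexPosition.x * PI + uTime * 0.0375) * 0.15;
function waveZ(x, time, frequency = 0.0375, amplitude = 0.15) {
  return Math.sin(x * Math.PI + time * frequency) * amplitude;
}

// Z offsets of `count` vertices evenly spread along the X axis, at a given time
function waveRow(time, count = 5) {
  const row = [];
  for (let i = 0; i < count; i++) {
    const x = -1 + (2 * i) / (count - 1); // from -1 to 1
    row.push(waveZ(x, time));
  }
  return row;
}
```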


aTextureCoord: the plane texture coordinates

These define the plane's texture coordinates. They range from 0 to 1 on both the X and Y axes, [0, 0] being the bottom-left corner and [1, 1] the top-right corner.
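Since both attributes share the same bottom-left origin, each texture coordinate can be derived from the matching vertex position. A small JavaScript sketch of that mapping (the helper name is hypothetical):

```javascript
// Maps a plane vertex position (aVertexPosition.xy, from -1 to 1)
// to the matching texture coordinate (aTextureCoord, from 0 to 1).
function vertexToTextureCoord(x, y) {
  return { u: (x + 1) / 2, v: (y + 1) / 2 };
}

// the four plane corners, bottom-left first
const planeCorners = [
  [-1, -1],
  [1, -1],
  [-1, 1],
  [1, 1],
].map(([x, y]) => ({ position: [x, y], textureCoord: vertexToTextureCoord(x, y) }));
```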

Texture coordinates are helpful for drawing geometric shapes, gradients and the like.

Here is an example where the RGB values of our displayed color are based on the Y component of our texture coordinates:
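A sketch of such a fragment shader, written as a JavaScript string so it could be passed with the `fragmentShader` plane parameter. It assumes vTextureCoord holds the raw aTextureCoord values (not multiplied by a texture matrix). A one-line CPU mirror of the same computation follows it.

```javascript
// Vertical grayscale gradient: black at the bottom of the plane, white at the top.
const gradientFragmentShader = `
  precision mediump float;

  varying vec2 vTextureCoord;

  void main() {
    // the same value on R, G and B, taken from the Y texture coordinate
    gl_FragColor = vec4(vec3(vTextureCoord.y), 1.0);
  }
`;

// CPU-side mirror of the shader above: RGBA color for a given Y coordinate
function gradientColor(textureCoordY) {
  return [textureCoordY, textureCoordY, textureCoordY, 1.0];
}
```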

Using texture matrices to pass texture coordinates as a varying

Keep in mind that when you multiply your texture coordinates by a texture matrix, they won't necessarily range from 0 to 1 on both axes anymore, since they are scaled to map your texture correctly:
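To see why, here is a JavaScript sketch of what the multiplication does to a coordinate. The matrix used is a hypothetical one that scales coordinates around the texture center, roughly what happens when an image has to be cropped to fill its plane; the real matrix is computed by the library.

```javascript
// Multiplies a column-major 4x4 matrix (the layout WebGL uses for mat4 uniforms)
// by vec4(u, v, 0.0, 1.0) and keeps .xy, like the shader expression
//   (uTextureMatrix0 * vec4(aTextureCoord, 0.0, 1.0)).xy
function applyTextureMatrix(m, u, v) {
  return {
    u: m[0] * u + m[4] * v + m[12], // z = 0 and w = 1
    v: m[1] * u + m[5] * v + m[13],
  };
}

// Hypothetical texture matrix scaling coordinates around the center (0.5, 0.5)
function centeredScaleMatrix(sx, sy) {
  return [
    sx, 0, 0, 0,
    0, sy, 0, 0,
    0, 0, 1, 0,
    (1 - sx) / 2, (1 - sy) / 2, 0, 1,
  ];
}
```

With a horizontal scale of 0.75, the plane's left and right edges end up at 0.125 and 0.875 instead of 0 and 1.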

If you want to map your texture correctly and get accurate texture coordinates at the same time (e.g. to draw geometric shapes), consider passing two varyings:
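A sketch of such a vertex shader, as a JavaScript string. The varying name `vTextureMatrixCoord` is hypothetical, and `uTextureMatrix0` assumes the default name of the first texture's matrix. In the fragment shader you would then sample the texture with vTextureMatrixCoord and use vTextureCoord to draw shapes.

```javascript
// Vertex shader passing both the raw and the matrix-mapped texture coordinates.
const twoVaryingsVertexShader = `
  precision mediump float;

  attribute vec3 aVertexPosition;
  attribute vec2 aTextureCoord;

  uniform mat4 uMVMatrix;
  uniform mat4 uPMatrix;
  uniform mat4 uTextureMatrix0;

  // raw coordinates, always from 0 to 1 (for shapes, gradients...)
  varying vec2 vTextureCoord;
  // coordinates scaled by the texture matrix (for sampling the texture)
  varying vec2 vTextureMatrixCoord;

  void main() {
    gl_Position = uPMatrix * uMVMatrix * vec4(aVertexPosition, 1.0);

    vTextureCoord = aTextureCoord;
    vTextureMatrixCoord = (uTextureMatrix0 * vec4(aTextureCoord, 0.0, 1.0)).xy;
  }
`;
```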

What about gl_FragCoord then?

In some examples online, such as the ones in the Book of Shaders, you'll see the texture coordinates (also called UV, or ST due to some naming conflict) being computed from the gl_FragCoord fragment shader built-in variable and a resolution uniform:

Here, gl_FragCoord.xy holds the fragment's pixel coordinates along the X and Y axes of the canvas, with the origin at the bottom-left corner.

If your plane has the same size and position as your rendering canvas, you could use this method to compute your coordinates. But if it doesn't, you'll have to take the plane's offset and size into account, which means extra calculations.
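To give an idea of those extra calculations, here is a JavaScript sketch of the math a fragment shader would need to recover the plane's own coordinates from gl_FragCoord. It assumes the plane's rectangle is known in canvas pixels, relative to the canvas top-left corner (as a DOM bounding rectangle gives it, once scaled by the pixel ratio); the function name is hypothetical. In a real shader, that rectangle would also have to be sent as an extra uniform and kept up to date on scroll and resize.

```javascript
// Recovers plane texture coordinates from gl_FragCoord when the plane does not
// cover the whole canvas. gl_FragCoord has its origin at the bottom-left of the
// canvas while DOM rectangles use the top-left, hence the Y flip.
function fragCoordToPlaneUv(fragX, fragY, canvasHeight, planeRect) {
  // distance from the canvas bottom edge to the plane bottom edge
  const planeBottom = canvasHeight - (planeRect.top + planeRect.height);
  return {
    u: (fragX - planeRect.left) / planeRect.width,
    v: (fragY - planeBottom) / planeRect.height,
  };
}
```

When the plane fills the canvas (left and top at 0, same size as the canvas), this reduces to gl_FragCoord.xy divided by the resolution.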

You should therefore always use the built-in aTextureCoord attribute.

Texture uniform matrices and sampler names

Let's say you want to build a slideshow with 3 images and a displacement image to create a nice transition effect.

By default, the texture uniform matrices and samplers are named after their index inside your plane element. If you have something like this:

Then, in your shaders, your texture matrices and samplers have to be declared this way:

It is handy, but it can also get confusing easily.

By adding a data-sampler attribute to the <img /> tag, you can specify custom uniform matrix and sampler names to use in your shaders. With the example above, this would become:
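The naming rule can be summed up with a small JavaScript helper. It only illustrates the convention, assuming curtains.js v7+ naming (`uSampler0` / `uTextureMatrix0` by default, and for `data-sampler="name"`, a `name` sampler with a `nameMatrix` matrix); the helpers themselves are hypothetical and not part of the library.

```javascript
// Uniform names for the texture at `index`, optionally overridden by data-sampler.
function textureUniformNames(index, dataSampler) {
  if (dataSampler) {
    return { sampler: dataSampler, matrix: `${dataSampler}Matrix` };
  }
  return { sampler: `uSampler${index}`, matrix: `uTextureMatrix${index}` };
}

// GLSL declarations: the matrix goes in the vertex shader, the sampler in the fragment shader
function glslDeclarations({ sampler, matrix }) {
  return {
    vertex: `uniform mat4 ${matrix};`,
    fragment: `uniform sampler2D ${sampler};`,
  };
}
```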

Handling scroll event

Since version 4.0, your planes' positions are updated by default while you scroll the page. You can control whether each plane updates its position according to the scroll by setting its own watchScroll property.

This means that the library automatically listens to the scroll event. But you might prefer not to listen to that event at all and handle the scroll yourself:

Doing it your own way

If you don't want the library to listen to the scroll under the hood, just set the watchScroll parameter to false when initializing your Curtains object. You will then have to listen to the scroll yourself and update your Curtains object's scroll values manually.
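A minimal sketch of that manual handling, assuming a curtains.js v8 `Curtains` instance created with `watchScroll: false` and its `updateScrollValues(x, y)` method; the `bindManualScroll` helper is hypothetical, and the window is passed in so the function is easy to test.

```javascript
// Forwards the page scroll offsets to curtains.js.
// Returns a function that removes the listener again.
function bindManualScroll(curtains, win) {
  const onScroll = () => {
    // pass the current scroll offsets to the library
    curtains.updateScrollValues(win.pageXOffset, win.pageYOffset);
  };

  // passive listener: we never call preventDefault()
  win.addEventListener("scroll", onScroll, { passive: true });

  return () => win.removeEventListener("scroll", onScroll);
}
```

Usage could look like `bindManualScroll(new Curtains({ container: "canvas", watchScroll: false }), window)`. With a virtual scroll library, you would instead call `updateScrollValues` from that library's own scroll callback.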

This can be pretty handy if you're using a virtual scroll library, as in this example: Multiple planes scroll effect with Locomotive scroll.

Note that this is how the library handles the scroll internally by default.

This is the most performant way to keep your whole scene in sync with the scroll as it requires only one layout repaint call.

Using videos as textures

Yes, videos as textures are supported! However, there are a few downsides you need to know about.

First, on most mobile devices you may run into trouble trying to autoplay videos without a user gesture. Unless you don't care about mobile users, you will have to start video playback after a user interaction such as a click.

Also, please note that videos tend to use a lot of memory and could have a significant impact on performance, so try to keep them small.

Besides that, videos are really easy to use (and can be mixed with images as well). Let's see how we can handle them:

HTML

Like with images, you can use a data-sampler attribute to set a uniform sampler name. You can use one or more videos, or mix them with images if you want:

Javascript

There's only one change in our JavaScript: we need to tell our plane when to start playing the videos. The plane has a playVideos method, which we call from an event listener set up in our onReady callback:
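A sketch of that change, assuming the curtains.js v8 `Plane.onReady()` and `Plane.playVideos()` methods; the helper name and the trigger element are hypothetical.

```javascript
// Starts the plane's videos on the first click on `trigger`,
// once the plane (and its textures) are ready.
function playVideosOnClick(plane, trigger) {
  plane.onReady(() => {
    // most mobile browsers only allow playback after a user gesture,
    // so start the videos from a click listener
    trigger.addEventListener(
      "click",
      () => {
        plane.playVideos();
      },
      { once: true } // design choice: only the first click starts playback
    );
  });
}
```

Attaching the listener inside onReady avoids calling playVideos before the video textures exist; the `once` option is a choice of this sketch, not something the library requires.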

And that's it. Check the video examples (and their source code) if you want to see what's possible.

Using canvas as texture

Last but not least, you can use a canvas as a texture. Once again, it is really easy to use. You just have to insert a canvas tag inside your HTML, or alternatively create it in your JavaScript and load it with the loadCanvas method.

HTML

You can use multiple canvases and data-sampler attributes as well, like you'd do with images or videos.

Javascript

The JavaScript code remains almost the same: we just set the size of our canvas, get its context, and draw a simple rotating red rectangle inside our animation loop.
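The drawing part only uses the standard Canvas 2D API. Here is a sketch of it as a hypothetical helper; the rectangle size (a quarter of the canvas) is an arbitrary choice.

```javascript
// Draws a red rectangle rotated by `angle` radians around the canvas center.
function drawRotatingRectangle(ctx, width, height, angle) {
  // erase the previous frame
  ctx.clearRect(0, 0, width, height);

  ctx.save();
  // rotate around the center of the canvas
  ctx.translate(width / 2, height / 2);
  ctx.rotate(angle);

  ctx.fillStyle = "#ff0000";
  // rectangle a quarter of the canvas size, centered on the origin
  ctx.fillRect(-width / 8, -height / 8, width / 4, height / 4);

  ctx.restore();
}
```

In the animation loop, assuming the curtains.js v8 `Plane.onRender()` method, this could be called as `plane.onRender(() => { angle += 0.02; drawRotatingRectangle(context, canvas.width, canvas.height, angle); });`, where `angle`, `canvas` and `context` are your own variables.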

Adding post-processing

You can add post-processing to your scene by using a ShaderPass object. It uses FBOs (Frame Buffer Objects) under the hood and enables some really cool effects.

Post-processing shaders are a bit different from plane shaders. They do not have any projection or model view matrix, and the shader pass silently creates a render texture that holds our scene (called uRenderTexture in our fragment shader).

Here are some very basic vertex and fragment shader examples that apply the same effect as our basic plane example above.

Post processing vertex shader
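A sketch of a pass-through vertex shader, as a JavaScript string that could be given to the ShaderPass `vertexShader` parameter. Since there are no projection or model view matrices, the vertex positions (already between -1 and 1) are used directly as clip-space coordinates, so the pass covers the whole canvas.

```javascript
// Post-processing vertex shader: no matrices, the quad fills the canvas.
const passVertexShader = `
  precision mediump float;

  attribute vec3 aVertexPosition;
  attribute vec2 aTextureCoord;

  varying vec3 vVertexPosition;
  varying vec2 vTextureCoord;

  void main() {
    // positions range from -1 to 1, which is exactly clip space
    gl_Position = vec4(aVertexPosition, 1.0);

    vTextureCoord = aTextureCoord;
    vVertexPosition = aVertexPosition;
  }
`;
```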

Post processing fragment shader
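A sketch of the matching fragment shader, again as a JavaScript string. It samples uRenderTexture, the texture holding the rendered scene, with the same kind of time-driven wave as the basic plane. uTime is not provided automatically: the sketch assumes you declare it through the ShaderPass uniforms parameter, as with a plane (for instance `{ time: { name: "uTime", type: "1f", value: 0 } }`). The wave constants are arbitrary.

```javascript
// Post-processing fragment shader distorting the whole rendered scene.
const passFragmentShader = `
  precision mediump float;

  varying vec3 vVertexPosition;
  varying vec2 vTextureCoord;

  uniform float uTime;
  // render texture holding our scene, created by the shader pass
  uniform sampler2D uRenderTexture;

  void main() {
    vec2 textureCoord = vTextureCoord;

    // small horizontal wave driven by the time uniform (arbitrary constants)
    textureCoord.x += sin(textureCoord.y * 10.0 + uTime * 0.03) * 0.01;

    gl_FragColor = texture2D(uRenderTexture, textureCoord);
  }
`;
```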

You can also load images, videos or canvases into your shader pass, as you'd do with a regular plane.

Finally, if you want to apply your shader pass to just a set of planes instead of your whole scene, you'll have to pass a RenderTarget element as a parameter. See Assigning a RenderTarget to a ShaderPass.

Performance tips

- If you experience a small sync latency between planes and DOM elements on mobile while scrolling, here's a GitHub discussion explaining how to solve it.

- Try to use only one global requestAnimationFrame loop and one scroll event listener in your application. You can either hook into the library's onRender() and onScroll() events, or handle them yourself by preventing the library from creating them in the first place with the init params (autoRender and watchScroll).

- Disable the scene drawing whenever you can and re-enable it when needed. This can save a lot of performance and battery when your WebGL scene is idle.

- You can try to use a rendering scale lower than 1 to improve performance (see the Curtains class init parameters). This will however decrease the rendering quality.

- Always add a crossorigin attribute to your image and video HTML elements. It prevents them from being loaded a second time when they are uploaded to the GPU.

- Canvas textures are updated at each frame (videos are updated each time a new frame is available), which has a significant impact on performance. When those textures are not visible (if they are hidden by another texture, or if you have finished drawing on your canvas...), you should set their shouldUpdate property to false, and switch it back to true before displaying them again.

- Large images have a bigger impact on performance. Try to scale your images so they fit your plane's maximum size. The same goes for videos, of course: keep them as light as possible.

- Render targets (and therefore shader passes) disable the WebGL context's default antialiasing. If you use them, you should set the antialias Curtains property to false when initializing your context. You might then want to add an extra antialiasing pass, such as an FXAAPass: see the Post processing scrolling wheel with custom transform origin example for how to add one.

- Shader passes can be expensive, so use them with caution.

- Keep the JavaScript in your onRender() callbacks as light as possible, since it gets executed at each draw call.
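Two of the tips above (pausing the drawing while the scene is idle, and stopping canvas or video texture updates while they are hidden) can be combined in a small helper. This is a sketch assuming the curtains.js v8 `Curtains.disableDrawing()` and `Curtains.enableDrawing()` methods and the texture `shouldUpdate` property; the helper name and the way you collect the textures are hypothetical.

```javascript
// Pauses or resumes the scene: toggles drawing and the per-frame updates
// of dynamic (canvas / video) textures together.
function setSceneActive(curtains, dynamicTextures, active) {
  // only update canvas and video textures while they can be seen
  for (const texture of dynamicTextures) {
    texture.shouldUpdate = active;
  }

  if (active) {
    curtains.enableDrawing();
  } else {
    // stop drawing the scene while it is idle or out of view
    curtains.disableDrawing();
  }
}
```

You could call it from an IntersectionObserver callback watching the canvas container, for instance `setSceneActive(curtains, [canvasTexture], entry.isIntersecting)`.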

Useful examples

These examples might seem a little rough: they don't use any tweening or animation engine, to keep the code focused on the library usage itself. You should definitely consider using easings in your own animations.

Do not hesitate to check their source code as they are all fully commented:

Images

Vertex coordinates helper

Plane properties and transformations cheat sheet

Simple plane

Slideshow with a displacement shader

Multiple planes

Multiple planes scroll effect: rotation, scale and parallax

Asynchronous textures loading

AJAX navigation with plane removal

Videos

Simple video plane

Multiple video textures with a displacement shader

Canvas

Simple canvas plane

Text planes using canvas

Post processing

Post processing displacement effect

Post processing multiple passes

Post processing scrolling wheel with custom transform origin

Advanced render targets usage

Ping pong shading / FBOs swapping flowmap example

Selective shader passes using render targets

GSAP click to fullscreen gallery

Custom scroll

Additional resources

- GitHub

- CSS Tricks tutorial

- Codrops WebGL Video Transitions with Curtains.js

- Creating WebGL Effects with CurtainsJS on CSS Tricks

- WebGL drag slider complete step-by-step tutorial on CodePen: part 1: creating the slider, part 2: adding the WebGL, part 3: improving performance

- CodePen curtains.js collection